How AI Search Picks Answers: LLM Rankings and Practical Optimization Steps

What Large Language Models Do — and Why They Matter for Search

How LLMs Turn Natural Language Into Search Answers

Which LLMs Drive AI Search Today?

From Retrieval to Response: How AI Search Generates Results

What RAG Is and Why It Improves Accuracy

How LLMs Pick and Synthesize Sources

Key Ranking Signals for LLM‑Driven Search Visibility

How E‑E‑A‑T and Authority Affect LLM Rankings

Why Structured Data and Semantic Relevance Matter

Practical LLM SEO Steps for Small Businesses

How SERTBO’s Tools Help Improve AI Search Performance

How SERTBO’s AI Bots Boost Engagement and Lead Quality

How Automation Keeps Your LLM SEO Program Running

How to Measure and Iterate on AI Search Optimization

AI search pairs probabilistic language models with retrieval systems so users see concise, context-aware answers instead of a long list of links. This guide walks through how Large Language Models (LLMs) and retrieval‑augmented pipelines power modern AI search, why ranking looks different from traditional SEO, and what small businesses need to do to stay visible to generative AI. You’ll get a practical primer on tokens, context windows, embeddings, and RAG, plus the ranking signals LLMs prefer and step‑by‑step tactics to make your content and site systems retrievable. We’ll also cover where automation and AI bots fit into an ongoing LLM SEO program and how SERTBO helps SMBs put these tactics into practice. First, we define LLMs; then we explain how AI search generates answers; next, we outline ranking factors, SMB implementation steps, automation patterns, and measurement frameworks to protect and grow AI visibility.

What Large Language Models Do — and Why They Matter for Search

Large Language Models (LLMs) are neural networks trained on massive text sets that predict and generate tokens based on context. In AI search, they both interpret queries and craft direct answers. Because LLMs model meaning rather than just matching keywords, visibility now hinges on clear entities and structured knowledge. This means creators should understand tokenization, context windows, and prompt framing so their content can be accurately pulled and summarized by these systems.

How LLMs Turn Natural Language Into Search Answers

LLMs break text into tokens, place those tokens in a context window, and use learned probability patterns to predict the next tokens that form coherent responses. Outputs depend heavily on the prompt, prior conversation turns, and any documents the system retrieved. Because the context window limits how much the model can condition on, pages that put key facts and action phrases early are easier for models to use. Knowing this token‑level behavior helps you structure pages and queries so LLMs can extract and rephrase accurate facts for direct answers.
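To make the context-window point concrete, here is a minimal sketch. It assumes whitespace splitting as a stand-in for a real subword tokenizer and simple truncation as the way a system fits text into the window (production systems more often split pages into chunks). The function names, the sample business, and the 32-token window are hypothetical and chosen only for illustration.

```python
def tokenize(text: str) -> list[str]:
    """Crude stand-in for a subword tokenizer: split on whitespace."""
    return text.split()


def fit_to_window(prompt: str, document: str, window: int) -> list[str]:
    """Keep the whole prompt, then as many document tokens as the window allows."""
    prompt_tokens = tokenize(prompt)
    budget = max(window - len(prompt_tokens), 0)
    return prompt_tokens + tokenize(document)[:budget]


fact = "Acme Plumbing offers 24-hour emergency repair in Austin."
filler = "Our story began decades ago. " * 20

page_fact_first = fact + " " + filler   # key fact in the opening sentence
page_fact_last = filler + fact          # same fact buried at the end

question = "Who offers emergency plumbing repair in Austin?"
early = fit_to_window(question, page_fact_first, window=32)
late = fit_to_window(question, page_fact_last, window=32)

fact_survives_early = "24-hour" in early   # fact fits inside the window
fact_survives_late = "24-hour" in late     # fact was cut off by truncation
```

In this toy setup the page that leads with the fact keeps it inside the window, while the page that buries it loses it entirely, which is the practical reason to put key facts early.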

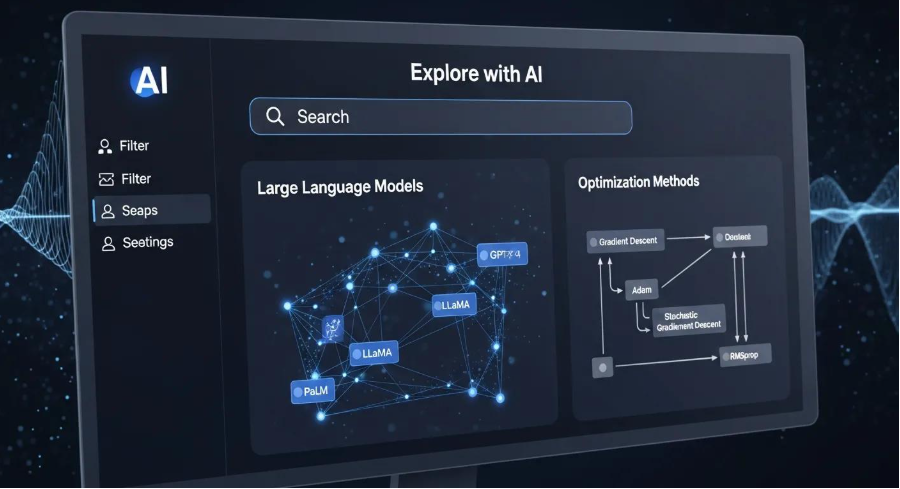

Which LLMs Drive AI Search Today?

Multiple LLM families shape AI search—some are tuned for long‑form synthesis, others for concise retrieval‑grounded answers, and many vendors mix models and retrieval layers. Models differ by size, training mix, and safety tuning, but the practical takeaway for content creators is the same: aim for semantic clarity, strong entity signals, and citation‑ready passages rather than model‑specific hacks. That model‑agnostic approach makes your content more resilient across different search products.

From Retrieval to Response: How AI Search Generates Results

AI search pipelines usually embed documents ahead of time, retrieve the most relevant ones for each query, then let an LLM synthesize a final answer, balancing breadth against factual grounding. Retrieval‑Augmented Generation (RAG) embeds both queries and documents, scores candidates by similarity, and supplies the top matches to the LLM as grounding. When the retrieval set is high quality, RAG reduces hallucination and allows source attribution. It also creates new optimization levers: passages that embed cleanly, clear entity labels, and standalone FAQ‑style passages increase the chance your content is chosen as a source.

What RAG Is and Why It Improves Accuracy

RAG follows three steps: embed the query, retrieve top documents from a vector store, and synthesize a grounded answer with the LLM. Better embeddings and higher‑quality indexes raise retrieval precision, which makes the LLM’s output easier to fact‑check and attribute. For businesses, that means investing in concise, standalone passages that map cleanly to user intents. Balance trade‑offs like latency, index quality, and freshness when deciding which content to structure and how often to refresh it.
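The three steps can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any particular search product works: the "embedding" is a bag-of-words count rather than a learned dense vector, the "vector store" is a plain dictionary, and the final step only builds the grounded prompt instead of calling a model. All names and sample passages are hypothetical.

```python
import math
import string
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts with punctuation stripped."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return Counter(cleaned.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, store: dict[str, str], k: int = 2) -> list[str]:
    """Steps 1 and 2: embed the query, then return the ids of the k most similar passages."""
    q = embed(query)
    ranked = sorted(store, key=lambda doc_id: cosine(q, embed(store[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, store: dict[str, str], doc_ids: list[str]) -> str:
    """Step 3 (input side): hand the retrieved passages to the model as grounding."""
    sources = "\n".join(f"[{d}] {store[d]}" for d in doc_ids)
    return f"Answer using only these sources and cite them by id.\n{sources}\nQuestion: {query}"


store = {
    "hours": "Our store is open Monday to Friday from 9am to 6pm.",
    "returns": "You can return any item within 30 days for a full refund.",
    "shipping": "Standard shipping takes three to five business days.",
}
query = "How many days do I have to return an item?"
top = retrieve(query, store, k=2)
prompt = build_prompt(query, store, top)
```

The short, self-contained returns passage ranks first because it shares the most terms with the question. With real embeddings, the match is on meaning instead of exact words, but the same idea holds: a passage that answers one intent cleanly is easier to retrieve.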

How LLMs Pick and Synthesize Sources

After retrieval, documents are scored and filtered; the LLM then uses extraction prompts or quote‑and‑rephrase patterns to assemble an answer and, where possible, cite sources. Candidate scoring looks at semantic similarity, recency, and on‑page signals like headings and schema. Prompt patterns that ask the model to extract sentences, cite sources, and flag uncertainty help reduce hallucination. If your pages include concise answer passages, explicit entity mentions, and clear metadata, they’re more likely to be picked and synthesized accurately.
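One way to picture candidate scoring is as a weighted blend of the signals named above. The sketch below is an assumption for illustration only: the weights, the 180-day recency half-life, and the field names are invented, and real systems do not publish their scoring formulas.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    url: str
    similarity: float          # semantic similarity to the query, 0..1
    age_days: int              # days since the page was last updated
    has_schema: bool           # page exposes structured data
    has_answer_heading: bool   # a heading states the question being answered


def score(c: Candidate, half_life_days: float = 180.0) -> float:
    """Blend similarity, recency, and on-page signals into one 0..1 score."""
    recency = 0.5 ** (c.age_days / half_life_days)   # halves every half_life_days
    on_page = 0.5 * c.has_schema + 0.5 * c.has_answer_heading
    return 0.6 * c.similarity + 0.25 * recency + 0.15 * on_page


candidates = [
    Candidate("/blog/long-history", similarity=0.80, age_days=720,
              has_schema=False, has_answer_heading=False),
    Candidate("/faq/returns", similarity=0.75, age_days=30,
              has_schema=True, has_answer_heading=True),
]
ranked = sorted(candidates, key=score, reverse=True)
```

In this hypothetical weighting, the slightly less similar FAQ page outranks the older blog post because it is fresh and carries schema and a clear heading.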

Key Ranking Signals for LLM‑Driven Search Visibility

LLM search blends semantic and behavioral signals, often favoring entity clarity, structured data, and content authority over raw link counts. Core factors include E‑E‑A‑T (experience, expertise, authoritativeness, trustworthiness), explicit schema (FAQ, Article, Service), semantic relevance via embeddings, freshness, and engagement signals that show helpfulness. Together, these signals form a complex ranking surface where short, retrievable answer passages frequently outcompete long pages that lack explicit, structured claims. Below are the most impactful signals to prioritize.

These ranking mechanisms are nuanced, which makes evaluating LLM outputs and ranking behavior a technical challenge.

Understanding LLM Ranking Algorithms & Evaluation

Evaluating large language models is complex. Pairwise ranking—having humans compare two outputs and record preferences—has become a common method for measuring quality across models. Aggregation techniques like Elo help build overall rankings, but applying these approaches to model evaluation introduces challenges, including inconsistent results and sensitivity to evaluation criteria.

Ranking Unraveled: Recipes for LLM Rankings in Head-to-Head AI Combat, R Daynauth, 2025
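For readers unfamiliar with Elo, here is a minimal sketch of how pairwise preferences can be aggregated into ratings. It uses the standard Elo update with an assumed K-factor of 32 and a starting rating of 1000. The model names and votes are made up, and this is not presented as the procedure used in the cited work.

```python
def expected(r_a: float, r_b: float) -> float:
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update(ratings: dict[str, float], winner: str, loser: str, k: float = 32.0) -> None:
    """Move rating points from loser to winner, scaled by how surprising the result was."""
    delta = k * (1.0 - expected(ratings[winner], ratings[loser]))
    ratings[winner] += delta
    ratings[loser] -= delta


def rank(votes: list[tuple[str, str]], models: list[str], start: float = 1000.0) -> dict[str, float]:
    """Process (winner, loser) votes in order and return the final ratings."""
    ratings = {m: start for m in models}
    for winner, loser in votes:
        update(ratings, winner, loser)
    return ratings


models = ["model_a", "model_b", "model_c"]
votes = [("model_a", "model_b"), ("model_b", "model_c"), ("model_a", "model_c")]

forward = rank(votes, models)
backward = rank(list(reversed(votes)), models)   # same votes, different order
```

Both runs agree on the ordering here, but the numeric ratings differ because Elo updates depend on the order in which comparisons arrive. That order sensitivity is one source of the inconsistency the excerpt mentions.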

Common LLM ranking signals include:

E‑E‑A‑T and authority: Content backed by real experience and clear expertise is more likely to be selected for direct answers.

Structured data and schema: Machine‑readable markup helps retrieval systems map entities and facts for RAG.

Semantic relevance (embeddings): Text that aligns cleanly in embedding space ranks better for meaning‑based queries.

Freshness and timeliness: Recently updated facts are favored for time‑sensitive queries.

Concise answer passages: Short, self‑contained passages that directly answer intent are preferred.

Engagement signals: Interaction metrics that show helpfulness can boost selection and ranking.

Balancing authority, clear structure, and answer engineering increases your chances of being chosen for AI answers.

Below is a quick comparison to help prioritize work by signal, characteristic, and action.

This makes E‑E‑A‑T and structured data foundational, while semantic relevance and freshness are tactical levers you can use to move the needle.

How E‑E‑A‑T and Authority Affect LLM Rankings

E‑E‑A‑T matters because retrieval layers and models prefer sources that show verifiable expertise and trust signals, especially on queries where accuracy matters. Content that documents experience or cites primary sources is more likely to be retrieved, while vague or unsupported pages risk being misused or misquoted. Add author bios, cite supporting studies, and mark up credentials in structured form so retrieval systems and models can evaluate authority more reliably. That also improves how users perceive any answer snippets generated from your content.

Why Structured Data and Semantic Relevance Matter

Structured data exposes entity fields in machine‑readable form, letting retrieval systems index discrete facts and improving embedding alignment. Schema types like Article, FAQPage, and Service signal intent and role for page elements; clear entity names reduce ambiguity during retrieval. Optimizing image alt text and filenames with entity descriptors helps multimodal retrieval. Combining schema with entity‑focused copy makes content easier to find in vector searches and simpler for LLMs to synthesize into accurate, attributable answers.
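As an illustration of machine-readable markup, the sketch below builds FAQPage JSON-LD using the schema.org vocabulary (FAQPage, Question, and Answer types). The helper name and the sample question are hypothetical. Generating the markup from a list of question and answer pairs is just one convenient approach; many sites produce it through a CMS plugin instead.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld([
    ("Do you offer emergency repairs?",
     "Yes. Our technicians handle emergency repairs around the clock."),
])
```

The resulting string is typically placed in the page inside a script element of type application/ld+json, and the answers should match text that is visible on the page.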

Practical LLM SEO Steps for Small Businesses

Small businesses can earn LLM visibility by prioritizing entity maps, concise answer passages, and structured data—and by automating routine tasks to scale freshness and engagement signals. Start with an entity inventory for core products and services, then build hub‑and‑spoke clusters with short, direct passages optimized for People Also Ask and snippet‑style prompts. Add FAQ schema, use action verbs in headings, and include a self‑contained 40–120 word passage that answers a single high‑intent query on each page. Those steps make your content easier to retrieve and synthesize for AI search.

That said, smaller teams often face practical constraints when adopting these tactics, which recent research underscores.

LLM‑Driven SEO Strategies for Small Businesses

Implementing LLM approaches for SEO brings challenges—data quality and availability are common hurdles, especially for smaller organizations that lack dedicated resources.

Utilizing LLMs for Enhancing Search Engine Optimization Strategies in Digital Marketing, T Samarah, 2025

Use this practical checklist:

Map entities: List your primary products, services, and business entities and link them to canonical pages and FAQs.

Create answer passages: Write short, self‑contained passages that address one query intent at a time.

Add schema: Implement Article, FAQPage, and Service schema to expose facts to retrieval layers.

Optimize action keywords: Put verbs and clear CTAs in headings to match conversion intent.

Maintain freshness: Schedule regular updates for hubs and supporting cluster pages.
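The answer-passage item in this checklist is easy to audit mechanically. The sketch below assumes you can already extract each page's paragraphs as plain strings (the extraction step is not shown) and uses the 40–120 word range recommended earlier. The function names and sample pages are hypothetical.

```python
def passage_ok(text: str, min_words: int = 40, max_words: int = 120) -> bool:
    """True when the passage length falls inside the target word range."""
    return min_words <= len(text.split()) <= max_words


def audit_passages(pages: dict[str, list[str]]) -> dict[str, bool]:
    """A page passes if at least one of its paragraphs is in range."""
    return {url: any(passage_ok(p) for p in paragraphs) for url, paragraphs in pages.items()}


pages = {
    "/services/drain-cleaning": ["short intro", "word " * 60],   # has a 60-word passage
    "/about": ["too short", "word " * 300],                      # nothing in range
}
report = audit_passages(pages)
```

A length check says nothing about whether the passage actually answers a single intent, so it works best as a first filter before a manual read.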

Many SMBs tie these tactics into automation and lead‑capture workflows. SERTBO consolidates lead capture across channels, enables real‑time customer contact via web chat, SMS, and AI bots, and automates email and text follow‑up. For SMBs that need to operationalize content freshness and convert AI‑driven intent into leads, SERTBO can automate FAQ ingestion, schedule content updates, and map captured intents into campaign triggers. Our free audit helps identify priority pages and flows for immediate LLM impact and shows how to turn visibility into actionable leads without splintering your tech stack.

How SERTBO’s Tools Help Improve AI Search Performance

SERTBO’s automation and marketing tools support the LLM signals that matter most—engagement, freshness, and structured answers—by centralizing capture and follow‑up. AI bots and real‑time messaging convert vague visitor signals into structured data that content teams can use to craft better answer passages and FAQs. Automated campaigns follow up on leads captured from AI answers, turning visibility into measurable conversions while keeping the content cadence search systems reward. These integrated capabilities let SMBs scale LLM‑focused optimization without piling on new tools.

How SERTBO’s AI Bots Boost Engagement and Lead Quality

Our AI bots ask clarifying questions, surface intent, and capture structured responses that can be stored as discrete data points in your CRM. Those interactions increase on‑page engagement (lowering bounce rate and improving helpfulness metrics) and produce short, copy‑ready snippets you can add to FAQs or knowledge bases to improve retrieval. Leads are tagged with intent labels and can automatically trigger targeted email or SMS sequences, preserving conversation context for later personalization. For SMBs, this generally means higher‑quality leads and a steady stream of real user queries to refine answer passages.

How Automation Keeps Your LLM SEO Program Running

Automation patterns that support LLM SEO include scheduled ingestion of bot Q&A into knowledge bases, automated schema updates when answers change, and workflows that trigger content refreshes after product or policy updates. These reduce manual work and keep hub pages current, which improves retrieval scores for freshness‑sensitive queries. Consolidating chat, capture, and campaign automation shortens the time from insight to content update. SERTBO’s automation suite is built to support these patterns and keep your content hygiene systematic—which LLM search systems reward.

How to Measure and Iterate on AI Search Optimization

Measuring LLM SEO blends traditional KPIs with new signals that show visibility, selection by models, and downstream conversions. Focus on metrics that link content to answers and business outcomes: AI answer appearances, citation rates, engagement on source pages, conversion from AI traffic, and adherence to freshness cadence. Tools that surface embedding similarity, retrieval logs, and model citation events are especially helpful. Combine automated telemetry with periodic manual audits to catch attribution gaps and topical drift. A steady measurement rhythm enables data‑driven pruning and expansion of your answer passages.

Recommended KPIs include:

AI answer appearances: How often your pages are used as sources in AI outputs.

Retrieval hit rate: Percent of queries that return your documents in the top‑k retrieval results.

Engagement metrics: Dwell time, bounce rate, and session depth on pages that feed answers.

Conversion rate by source: Leads or actions attributable to AI‑driven traffic.

Content freshness compliance: Share of hub pages updated on the planned schedule.
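Retrieval hit rate is the most mechanical of these KPIs, so it makes a good worked example. The sketch below assumes you have retrieval logs to work from, for instance from your own site search or a RAG system you operate, since third-party AI search products generally do not expose them. The log format (one ordered list of result URLs per query) and the domains are hypothetical.

```python
from urllib.parse import urlparse


def retrieval_hit_rate(log: list[list[str]], domain: str, k: int = 5) -> float:
    """Share of logged queries whose top-k results include a URL on `domain`."""
    if not log:
        return 0.0
    hits = sum(
        any(urlparse(url).netloc == domain for url in results[:k])
        for results in log
    )
    return hits / len(log)


# Each inner list is the ranked result list for one query.
log = [
    ["https://example.com/faq", "https://other.com/a"],
    ["https://other.com/b", "https://other.com/c", "https://example.com/pricing"],
    ["https://other.com/d"],
    ["https://rival.com/x", "https://example.com/hours"],
]
rate_top2 = retrieval_hit_rate(log, "example.com", k=2)
rate_top3 = retrieval_hit_rate(log, "example.com", k=3)
```

Tracking the rate at more than one value of k shows whether your pages are being retrieved at all or only narrowly missing the cutoff.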

Tools and KPIs to Track LLM SEO and AI Visibility

Track LLM SEO by combining search analytics, vector retrieval logs, and lead attribution. Use query logs to surface high‑value intents, monitor embedding similarity to detect topical drift, and follow conversion funnels for pages that serve as answer sources. Regular manual audits—checking that target passages are concise, schema‑marked, and evidence‑backed—complement telemetry. Together, these practices let teams iterate on content, reindex strategically, and validate that changes increase retrieval and conversion metrics. A consistent audit cadence keeps content aligned with evolving LLM behavior.

Why Ongoing Freshness Matters for LLM Rankings

Fresh content signals updated facts and recent experience to retrieval layers, raising the chance a page will be selected for time‑sensitive queries and reducing the model’s reliance on older sources. Small edits, such as adding a new FAQ, updating a stat, or inserting recent customer questions captured by bots, can refresh a passage in indexes and improve selection probability. We recommend quarterly audits for hub pages and twice‑yearly reviews for supporting clusters, with alerts for high‑impact changes. Keeping content fresh helps LLMs retrieve the most current, authoritative passages for synthesis and citation.
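A review cadence like this is simple to enforce with a small script. The sketch below treats the hub cadence as roughly 91 days and reads the cluster cadence as twice a year, roughly 182 days. Both numbers are assumptions, as are the page inventory, the dates, and the function names.

```python
from datetime import date, timedelta

# Assumed review intervals: quarterly for hubs, twice a year for clusters.
REVIEW_INTERVAL = {"hub": timedelta(days=91), "cluster": timedelta(days=182)}


def overdue(pages: dict[str, tuple[str, date]], today: date) -> list[str]:
    """Return URLs whose last update is older than the interval for their page type."""
    return [
        url for url, (kind, last_updated) in pages.items()
        if today - last_updated > REVIEW_INTERVAL[kind]
    ]


def compliance(pages: dict[str, tuple[str, date]], today: date) -> float:
    """Share of pages that are still inside their review interval."""
    if not pages:
        return 1.0
    return 1.0 - len(overdue(pages, today)) / len(pages)


pages = {
    "/services": ("hub", date(2025, 5, 1)),
    "/pricing": ("hub", date(2025, 1, 15)),
    "/blog/tips": ("cluster", date(2025, 2, 1)),
    "/blog/old": ("cluster", date(2024, 11, 1)),
}
today = date(2025, 7, 1)
late_pages = overdue(pages, today)
freshness_compliance = compliance(pages, today)
```

The overdue list can feed an alert or a task queue, and the compliance figure maps directly onto the content freshness KPI described earlier.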

SERTBO’s free audit can highlight your highest‑impact pages and the workflows to automate freshness. If you’re ready to convert better AI visibility into leads, our audit and services show quick wins and implementation paths tuned to your traffic and resources.

Frequently Asked Questions

How is AI search optimization different from traditional SEO?

Traditional SEO focuses on keywords, backlinks, and ranking signals driven by link authority. AI search optimization shifts the emphasis to user intent, semantic relevance, and structured content. LLM‑powered systems prefer concise, context‑aware answers over a ranked list of links, so you should prioritize entity clarity, machine‑readable schema, and short answer passages that map directly to queries.

What can small businesses do to optimize for AI search?

Start with clear, entity‑rich passages that answer common questions. Add FAQ schema and other relevant structured data. Keep a content freshness plan and map core entities to landing pages. Make sure each page has at least one 40–120 word passage that cleanly answers a high‑intent query—those passages are the easiest for retrieval systems to surface and for LLMs to cite.

Does user engagement matter for AI search rankings?

Yes. Engagement signals like dwell time, bounce rate, and interaction indicate helpfulness and relevance. High engagement can improve the chance a page is used as a source. Focus on useful, scannable content that encourages users to stay and interact.

How often should content be refreshed for AI visibility?

Freshness matters, especially for time‑sensitive topics. We recommend quarterly audits for hub pages and twice‑yearly reviews for cluster content, with faster updates for pages tied to current events or product changes. Small, frequent edits, such as new FAQs or updated stats, can be enough to refresh retrieval scores.

What challenges do small businesses face with LLM SEO?

Common challenges include limited content resources, maintaining data quality, and the technical work needed for structured data. Rapid changes in AI behavior add complexity. To scale effectively, many small teams use automation tools (like SERTBO) to capture intent, feed content pipelines, and schedule updates without heavy manual effort.

How do I know if my AI search optimization is working?

Track AI answer appearances, retrieval hit rates, engagement on pages used as sources, and conversion rates from AI traffic. Tools that show embedding similarity, retrieval logs, and citation events help with attribution. Combine automated tracking with manual audits to confirm that changes actually improve retrieval and conversions.

Conclusion

AI search reshapes how visibility works. By understanding LLMs and focusing on entity clarity, structured data, concise answer passages, and regular content freshness, businesses can increase their chances of being selected for direct answers. Start with an entity map, add schema, automate capture and updates where possible, and measure the signals that tie content to outcomes. That practical approach will keep your content relevant and authoritative as AI search evolves.

Ready to take your content strategy to the next level? Contact us today to ensure your business stands out in the evolving AI search landscape.